A complete guide to edge SEO

A Complete Guide to Edge SEO: Everything You Wanted to Know

In the last few years, edge computing has emerged as a type of cloud technology that sectors as diverse as civil engineering and video gaming have taken advantage of. Edge computing runs code and serves data from servers located close to the end user rather than from a single central server. This lets those sectors transfer data and make other changes to their digital platforms much faster, without necessarily needing the usual development resources at hand.

Today, SEOs are increasingly adding edge computing to the range of tools they use to execute their campaigns. This has led to the emergence of a strand of SEO referred to as Edge SEO. This blog aims to provide a complete guide to what Edge SEO is and how it can benefit you as an SEO professional.
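To make this more concrete: a common form of Edge SEO is running small pieces of logic on a CDN's edge servers, between the user (or search engine crawler) and your origin server. Typical tasks include serving redirects or adding HTTP headers without changing the site's own codebase. The sketch below models that per-request decision as a plain Python function. It is runtime-agnostic and does not use any CDN's real API, and the redirect map, paths and the choice of an `X-Robots-Tag` header are all hypothetical examples.

```python
from dataclasses import dataclass, field

# Hypothetical redirect map an SEO might maintain at the edge (old path -> new path).
REDIRECTS = {
    "/old-category/": "/new-category/",
    "/blog/edge-seo-guide": "/blog/a-complete-guide-to-edge-seo",
}

# Hypothetical path prefixes that should be kept out of the index.
NOINDEX_PREFIXES = ("/internal-search/",)


@dataclass
class EdgeResponse:
    status: int
    headers: dict = field(default_factory=dict)


def handle_request(path: str) -> EdgeResponse:
    """Decide at the edge whether to redirect, or pass the request through with extra headers."""
    target = REDIRECTS.get(path)
    if target is not None:
        # Permanent redirect answered by the edge, so the origin never sees the request.
        return EdgeResponse(status=301, headers={"Location": target})

    # Otherwise pass the origin response through (modelled here as a plain 200),
    # adding a noindex header for sections that should stay out of search results.
    response = EdgeResponse(status=200)
    if path.startswith(NOINDEX_PREFIXES):
        response.headers["X-Robots-Tag"] = "noindex"
    return response


if __name__ == "__main__":
    print(handle_request("/old-category/"))
    print(handle_request("/internal-search/shoes"))
    print(handle_request("/products/"))
```

In a real deployment, the same decision logic would be written for the edge platform's own runtime (for example, a Cloudflare Worker). The SEO benefit is that redirects and headers can change without waiting on a release of the main site.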

In this blog you will learn:

A complete guide to optimising your website architecture for SEO

Your website architecture should be an essential part of your technical SEO strategy. Whether you're working in-house or agency side, the content on your (or your client's) website needs a well-organised, topic-based categorisation. This foundation lets both search engines and users easily discover, land on and browse your content.

Moreover, focusing part of your overall strategy on website architecture also covers many of your other technical SEO efforts. These include effective internal linking, easier crawling and indexing by search engines, and accurate keyword targeting, to name a few. Because a single approach covers so many bases, this SEO investment should certainly not be overlooked.

It is for this reason that we’ve prepared this guide on how to optimise your website architecture for SEO. But before we get into the important steps and best practices, we first need to understand a few things, such as what website architecture is and how it benefits the user experience on your site.
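One common way to check how well a site's architecture supports crawling is click depth. Click depth is the number of internal links a user or crawler must follow from the homepage to reach a page. The sketch below computes it with a breadth-first search over an internal link graph, and also flags orphan pages that no internal link reaches. The graph and URLs are hypothetical; in practice you would build the graph from a crawl of the site.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from `start` to every reachable page (BFS)."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest click path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/shoes/", "/bags/"],
    "/shoes/": ["/shoes/running/", "/shoes/boots/"],
    "/shoes/running/": ["/shoes/running/model-x/"],
    "/bags/": [],
    "/old-landing-page/": ["/shoes/"],  # links out, but nothing links to it
}

depths = click_depths(site)
all_pages = set(site) | {t for targets in site.values() for t in targets}
orphans = all_pages - set(depths)  # pages never reached from the homepage

for page, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, page)
print("Orphan pages:", orphans)
```

Pages that sit many clicks deep, or are orphaned entirely, are usually the first candidates for better categorisation and internal linking.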

» Read more about: A complete guide to optimising your website architecture for SEO »

How to Analyse Brand & Non-Brand Organic Traffic

Organic traffic is one of the most fundamental metrics to report on in SEO. It is one of the key indicators of how your site is performing, and it informs the recommendations that make up your overall strategy. However, one basic element often missing from our traffic data is how much of our organic performance comes from brand-related queries and how much from non-brand queries.

This guide will show you:

- How to extract Google Search Console query data directly into Google Sheets using the Search Analytics for Sheets extension

- How to segment the data into brand and non-brand traffic

- How to present the data in a way that is most useful to you and your clients

Knowing how much of our traffic is brand or non-brand can be essential in shaping your SEO strategy going forward. If your site relies too heavily on branded keywords, it is missing out on the significant search volume that non-branded keywords have to offer. This may seem obvious, but we may not have access to this data for various reasons. One of the main reasons could be that we don’t have the necessary tool, or process, to segment organic traffic into brand and non-brand.

Google Search Console is available to all site owners and SEOs. You could use GSC to download your query data and then filter it into brand and non-brand. The downside, however, is that the GSC interface only lets you export up to 1,000 rows.

However, an alternative tool that is also accessible to everyone is the Search Analytics for Sheets extension for Google Sheets. The main difference from Google Search Console is that you can extract data well beyond the 1,000-row limit. Moreover, the data loads directly into Google Sheets. You no longer have to export the data as a CSV, load it into Sheets and then format it to your liking. That process is especially frustrating with large amounts of data, which can take a considerable amount of time to load.
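Tools that pull more rows than the interface allows typically do so by requesting data from the Search Console API in pages. The loop below sketches that pagination pattern. `fetch_page` is a hypothetical stand-in for an API call that takes a zero-based start row and a row limit. The Search Console API's `searchanalytics.query` method exposes `startRow` and `rowLimit` request fields for this purpose. The default page size of 25,000 assumes the API's documented per-request maximum, so check the current limit before relying on it.

```python
from typing import Callable


def fetch_all_rows(
    fetch_page: Callable[[int, int], list[dict]],
    page_size: int = 25_000,
) -> list[dict]:
    """Keep requesting pages until one comes back shorter than page_size."""
    rows: list[dict] = []
    start_row = 0
    while True:
        page = fetch_page(start_row, page_size)
        rows.extend(page)
        if len(page) < page_size:  # a short (or empty) page means there is no more data
            return rows
        start_row += page_size


# Hypothetical fake API for illustration: 2,500 query rows served in pages.
FAKE_DATA = [{"query": f"query {i}", "clicks": 1} for i in range(2_500)]


def fake_fetch(start_row: int, row_limit: int) -> list[dict]:
    return FAKE_DATA[start_row:start_row + row_limit]


all_rows = fetch_all_rows(fake_fetch, page_size=1_000)
print(len(all_rows))
```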

These advantages make the Search Analytics for Sheets extension an ideal choice for any SEO looking for a free tool to segment their traffic into brand and non-brand. Besides this tool, all you need is Google Sheets itself and a few nifty formulas to calculate the total branded and non-branded share. You can find all the formulas in the free template included in our guide. With this data, you can then begin to pull useful insights to inform your SEO strategy and recommendations.
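Once the query data is in one place, the segmentation step itself is simple. The guide does it with Google Sheets formulas. As an illustrative alternative, the sketch below does the same classification in Python: it matches queries against a regular expression of brand terms and then calculates the branded and non-branded share of clicks. The brand name "Acme", its misspelling and the query rows are hypothetical. In practice, the pattern should include the common misspellings and variants of your own brand.

```python
import re

# Hypothetical brand terms for an example brand "Acme", including a common misspelling.
BRAND_PATTERN = re.compile(r"\b(acme|acmee)\b", re.IGNORECASE)

# Hypothetical rows as exported from Search Console: (query, clicks).
rows = [
    ("acme running shoes", 120),
    ("Acme discount code", 80),
    ("best running shoes", 300),
    ("acmee boots", 20),
    ("waterproof hiking boots", 180),
]


def segment(rows: list[tuple[str, int]]) -> dict[str, int]:
    """Sum clicks into brand and non-brand buckets."""
    totals = {"brand": 0, "non-brand": 0}
    for query, clicks in rows:
        bucket = "brand" if BRAND_PATTERN.search(query) else "non-brand"
        totals[bucket] += clicks
    return totals


totals = segment(rows)
total_clicks = sum(totals.values())
for bucket, clicks in totals.items():
    print(f"{bucket}: {clicks} clicks ({clicks / total_clicks:.0%})")
```

The same approach works for impressions. Adding a second column for impressions and summing it the same way shows whether branded queries dominate visibility as well as clicks.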

» Read more about: How to Analyse Brand & Non-Brand Organic Traffic »

Optimising Your eCommerce Site for JavaScript SEO

Read our blog post here at Re:signal and discover 10 ways to optimise your eCommerce site for JavaScript SEO, from indexation to on-page SEO.

MUM: A Guide to Google’s Algorithm Update

MUM’s the Word: Everything You Wanted to Know About Google’s Algorithm Update

In May, Google announced an algorithm update called MUM, which stands for Multitask Unified Model. Google presented it as the latest advancement in its search engine’s capabilities, and so a new chapter of search was opened to us.

But, before going on to discuss MUM any further, we have to go back to 2017 and discuss Google’s machine learning journey, to understand the latest search algorithm update in its full context.

Transformer, Google’s Machine Learning Model

MUM, like other language AI models in Google’s AI ecosystem, is built on a neural network architecture called Transformer, which Google invented and later made open source.

One of the most prominent capabilities the Transformer architecture has demonstrated is that it can produce a model trained to read the many words that make up a sentence or paragraph and understand how those words relate to one another. In other words, it can recognise the semantic relationships between words and potentially predict which words will come next. Google illustrated this in its 2017 research paper entitled “Attention Is All You Need”, which you can read to learn about the Transformer architecture in more detail.
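The core mechanism described in that paper is scaled dot-product attention. It is what lets a Transformer weigh how strongly each word relates to every other word. For query, key and value matrices Q, K and V with key dimension d_k, the paper defines it as softmax(QK^T / sqrt(d_k)) V. The pure-Python sketch below implements that formula on tiny, hand-picked vectors. It is a teaching illustration of a single attention step, not how MUM or BERT are implemented in practice. Those models use learned projections, many attention heads and many stacked layers.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one output row per query."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for three "words".
X = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
# Self-attention: the same vectors act as queries, keys and values.
outputs, weights = attention(X, X, X)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of weights shows how much one word "attends" to the others. Words with similar embeddings (the first two here) receive more weight from each other than from the dissimilar third word.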

Neural networks are now at the forefront of approaches to language understanding tasks, such as language modelling, machine translation and question answering.

We can see the Transformer architecture at work in language models such as LaMDA, which stands for Language Model for Dialogue Applications and is designed for conversational applications such as chatbots. It also underpins BERT, the predecessor to MUM, which Google introduced in October 2018 and rolled out as a search algorithm update in October 2019. BERT was announced in an earlier research paper published by Google AI Language, entitled “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding”.

Before MUM There Was BERT

BERT, which stands for Bidirectional Encoder Representations from Transformers (no, this isn’t referring to the cute character from Sesame Street, sorry to disappoint), is a neural network-based technique for natural language processing (NLP) pre-training. The advent of BERT made the Transformer model and NLP a mainstay of Google Search going forward.

BERT was able to help Google achieve many advancements in search. One of the key advancements was its ability to better understand the intent behind the language and the context of words in a search query, thus being able to return more relevant search results.

An example of this is if you took the query “math practice books for adults”

» Read more about: MUM: A Guide to Google’s Algorithm Update »

How to Optimise Your Website for Core Web Vitals: LCP, FID & CLS

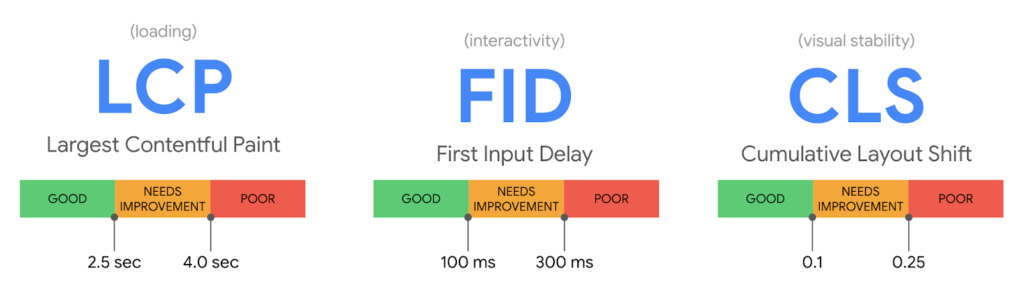

We’ve recently released a blog post on how to report on Core Web Vitals using the CrUX dashboard in Data Studio. In that post, we discuss why the CrUX dashboard is an essential tool. It gives you field data, plus historical data from previous months, so you can report on and monitor your progress in line with Google’s page experience update.

However, that’s only half the story. Now that you have the right tools to give you the most accurate data on your site’s Core Web Vitals performance, you need to know how to optimise your website. The goal is to meet Google’s thresholds, achieve good scores and provide a better page experience for your users.
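As a reference point, these are Google's published thresholds for a "good" experience:

- LCP: 2.5 seconds or less
- FID: 100 milliseconds or less
- CLS: 0.1 or less

A score counts as "poor" above 4 seconds for LCP, 300 milliseconds for FID and 0.25 for CLS. Each metric is assessed at the 75th percentile of page loads. The sketch below applies those thresholds to a set of field samples for one page. The sample values are hypothetical, and the nearest-rank percentile is a simplification of how Google aggregates field data.

```python
import math

# Core Web Vitals thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "FID": (100, 300),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout shift score
}


def p75(samples: list[float]) -> float:
    """75th percentile using the nearest-rank method (a simplification)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical field samples for one page.
field_data = {
    "LCP": [1800, 2100, 2400, 2600, 3100, 2200, 1900, 4200],
    "FID": [12, 40, 85, 150, 30, 22, 60, 95],
    "CLS": [0.02, 0.05, 0.12, 0.30, 0.08, 0.01, 0.04, 0.09],
}

for metric, samples in field_data.items():
    value = p75(samples)
    print(f"{metric}: p75 = {value} -> {rate(metric, value)}")
```

Judging the 75th percentile rather than the average means that a minority of slow page loads can still pull a page out of the "good" band. In this example, LCP is the metric to prioritise.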

Before you start, it is important to establish that this work will require a developer or a development team, depending on the size of your website. You will need to alter CSS stylesheets, JavaScript files and other parts of the site’s code, so you will need a developer who can write code and make the changes recommended in this blog post.

Before you can start optimising, it is also worth pointing out the tools you need to identify Core Web Vitals elements on a web page. The two most useful tools available are PageSpeed Insights and Chrome DevTools. These platforms are a must-have when improving your Core Web Vitals scores. They are both free, easily accessible and designed to diagnose Core Web Vitals behaviour on a web page.

Now that we know which tools to use to diagnose Core Web Vitals issues, we can begin our recommendations to optimise for Core Web Vitals. Let’s start by discussing the first Core Web Vital, Largest Contentful Paint.

Largest Contentful Paint

Several types of element can be the Largest Contentful Paint element on a web page:

- An image, such as the hero or background image

- An H1 heading

- A block of text

Typically, however, the LCP element is an image visible in the viewport.
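Conceptually, the browser reports as LCP the candidate element (an image or text block) with the largest area rendered inside the viewport. Any part of an element that falls outside the viewport doesn't count towards its size. The sketch below mimics that selection for a few hypothetical elements and bounding boxes. It is a simplification, because the browser's real algorithm also accounts for details such as image scaling and elements that render later. In practice you would read the LCP element from PageSpeed Insights or Chrome DevTools rather than compute it yourself.

```python
from dataclasses import dataclass


@dataclass
class Element:
    name: str
    x: float  # left edge in CSS pixels
    y: float  # top edge in CSS pixels
    width: float
    height: float


def visible_area(el: Element, viewport_w: float, viewport_h: float) -> float:
    """Area of the element's box clipped to the viewport (0 if it is fully off-screen)."""
    overlap_w = max(0.0, min(el.x + el.width, viewport_w) - max(el.x, 0.0))
    overlap_h = max(0.0, min(el.y + el.height, viewport_h) - max(el.y, 0.0))
    return overlap_w * overlap_h


def lcp_candidate(elements: list[Element], viewport_w: float, viewport_h: float) -> Element:
    """Pick the element with the largest visible area, a simplified LCP selection."""
    return max(elements, key=lambda el: visible_area(el, viewport_w, viewport_h))


# Hypothetical above-the-fold layout on a 1366x768 viewport.
page = [
    Element("hero image", 0, 80, 1366, 600),
    Element("H1 heading", 100, 300, 800, 60),
    Element("text block", 100, 700, 1000, 400),  # mostly below the fold
]

print(lcp_candidate(page, 1366, 768).name)
```

The large text block only counts for the strip visible above the fold, which is why the hero image wins here even though the text block's full box is bigger than the H1.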

As mentioned above, we can identify the LCP element on a page using either PageSpeed Insights or Chrome DevTools. To find the LCP element with PageSpeed Insights, simply enter the web page’s URL into the PageSpeed Insights search bar.

» Read more about: How to Optimise Your Website for Core Web Vitals: LCP, FID & CLS »

How to measure Core Web Vitals in Google Data Studio with CrUX

The Core Web Vitals update is upon us, and it is worth looking at how we as SEOs can best prepare for its impact. We should be fully aware not only of what it is, but also of which tools we can use to report accurately. Those tools should also help us draw meaningful insights to recommend to our clients for a better user experience.

To do so, one of the most useful tools is the Chrome User Experience Report, more casually known as CrUX. CrUX is a public dataset that Google collects from real user experiences on over four million websites, known as field data. Field data is considered more reliable, and a better representation of your site’s performance, than lab data.

The data is stored in BigQuery, Google’s cloud data warehouse, and can be retrieved using SQL queries.

Not only does the CrUX dataset report on the three Core Web Vitals, Largest Contentful Paint (LCP), First Input Delay (FID) and Cumulative Layout Shift (CLS), but also on diagnostic metrics such as the First Contentful Paint (FCP) and Time to First Byte (TTFB), as well as user demographic dimensions like device distribution and connectivity distribution.
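In the CrUX BigQuery dataset, each metric is stored as a histogram of bins. Each bin has a start value, an end value and a density, which is the share of page loads that fell into that bin. The sketch below shows how a "percentage of good experiences" figure for LCP can be derived from such bins. The bin values are hypothetical and would normally come from a SQL query against the dataset. The field names `start`, `end` and `density` follow the CrUX histogram schema as we understand it. Treating the final bin as open-ended (no `end` value) is an assumption, so check both against the current schema before relying on this.

```python
# Hypothetical LCP histogram for one origin, in the bin shape CrUX uses:
# each bin covers [start, end) milliseconds and density is its share of page loads.
lcp_bins = [
    {"start": 0, "end": 1000, "density": 0.20},
    {"start": 1000, "end": 2500, "density": 0.45},
    {"start": 2500, "end": 4000, "density": 0.20},
    {"start": 4000, "end": None, "density": 0.15},  # open-ended final bin (assumed)
]

GOOD_LCP_MS = 2500  # upper bound for a "good" LCP


def share_below(bins: list[dict], threshold: float) -> float:
    """Sum the density of bins that end at or below the threshold."""
    return sum(b["density"] for b in bins if b["end"] is not None and b["end"] <= threshold)


good_share = share_below(lcp_bins, GOOD_LCP_MS)
# Normalise by the total, in case the selected bins (e.g. one device type) don't sum to exactly 1.
total = sum(b["density"] for b in lcp_bins)
print(f"Good LCP: {good_share / total:.0%} of page loads")
```

This calculation only works cleanly when the threshold falls on a bin boundary, as 2,500 ms does here. If a bin straddles the threshold, you would need to decide how to split its density.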

Image credit: web.dev

» Read more about: How to measure Core Web Vitals in Google Data Studio with CrUX »