What is Technical SEO And How Can You Improve It?

Google uses over 200 factors to judge and rank web pages according to SEO research, and around 20% of these factors are based on your website’s technical health. So working on improving your technical SEO is important. But what does that mean these days? And what are the most important tech SEO factors?

What is Technical SEO?

Technical SEO is a type of search engine optimization that is all about improving the infrastructure of your website. As the name suggests, it involves optimising the aspects of your website that affect its technical health, from page speed to URL structure.

The aim of these optimizations is to help search engines to crawl, index, and ultimately rank your content while also providing a better user experience to searchers. So, to really understand how to work on your technical SEO, you need to understand how search engines work.

How Search Engines Work

Search engines, like Google, need to discover all of the pages on the internet, understand the content on them, and decide which ones best match each search query made by their users. For context, there are 5.6 billion searches made every day, so Google needs to be efficient, accurate, and fast.

To do this, search engines work in three steps:

- Crawling: Google sends a robot, called Googlebot, to discover all the pages on a website. Googlebot does this by starting from the homepage and following all of the links until it runs out of time.

- Indexing: Once Googlebot has collected all the web pages that it can find, it takes a snapshot of them and returns to Google, where the content is processed, understood, and stored in an index next to other related pages.

- Ranking: When someone makes a search, Google looks through its index to find the most relevant pages. It then ranks these results by relevance using complex algorithms.

Technical SEO is about making this 3-step process as efficient and slick as possible to give your content the best opportunity to rank.
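To make the three steps concrete, here is a deliberately tiny sketch of crawling, indexing, and ranking. It is a toy model only: the three-page site, the function names, and the word-count scoring are all assumptions made for illustration, and real search engines are far more sophisticated.

```python
from collections import defaultdict, deque

# Hypothetical three-page site: URL -> (page text, outgoing internal links).
SITE = {
    "/": ("welcome to our shoe shop", ["/trainers", "/boots"]),
    "/trainers": ("running trainers and running shoes", ["/"]),
    "/boots": ("leather boots", ["/"]),
}

def crawl(start):
    """Step 1: discover pages by following links from the start page."""
    seen, queue = [start], deque([start])
    while queue:
        _, links = SITE[queue.popleft()]
        for url in links:
            if url in SITE and url not in seen:
                seen.append(url)
                queue.append(url)
    return seen

def build_index(urls):
    """Step 2: record, for each word, how often it appears on each page."""
    index = defaultdict(dict)
    for url in urls:
        for word in SITE[url][0].split():
            index[word][url] = index[word].get(url, 0) + 1
    return index

def rank(index, query):
    """Step 3: order matching pages by a naive word-count score."""
    scores = defaultdict(int)
    for word in query.split():
        for url, count in index.get(word, {}).items():
            scores[url] += count
    return sorted(scores, key=lambda url: (-scores[url], url))

pages = crawl("/")
results = rank(build_index(pages), "running shoes")
print(pages)
print(results)
```

A page that is never linked to would not appear in `pages` at all, which is why discoverability comes before everything else.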

How To Improve Your Site’s Technical SEO

When it comes to working on your site’s technical SEO, you need to consider how you can make it easy for Googlebot to access your site, find all the content, understand all of the content, and know when to rank it. This involves optimising several aspects of your website, including:

- Site architecture

- URL structure

- Robots.txt

- XML sitemaps

- Structured data

- 301/302 redirects

- Canonical tags

- Hreflangs

- Noindex / Nofollow

- PageSpeed optimization

Site Architecture

Tip: Use a Flat, Organised Site Structure

Site architecture is how your web pages are structured and linked in relation to one another. This can include internal links, category structure, menu navigation, and more.

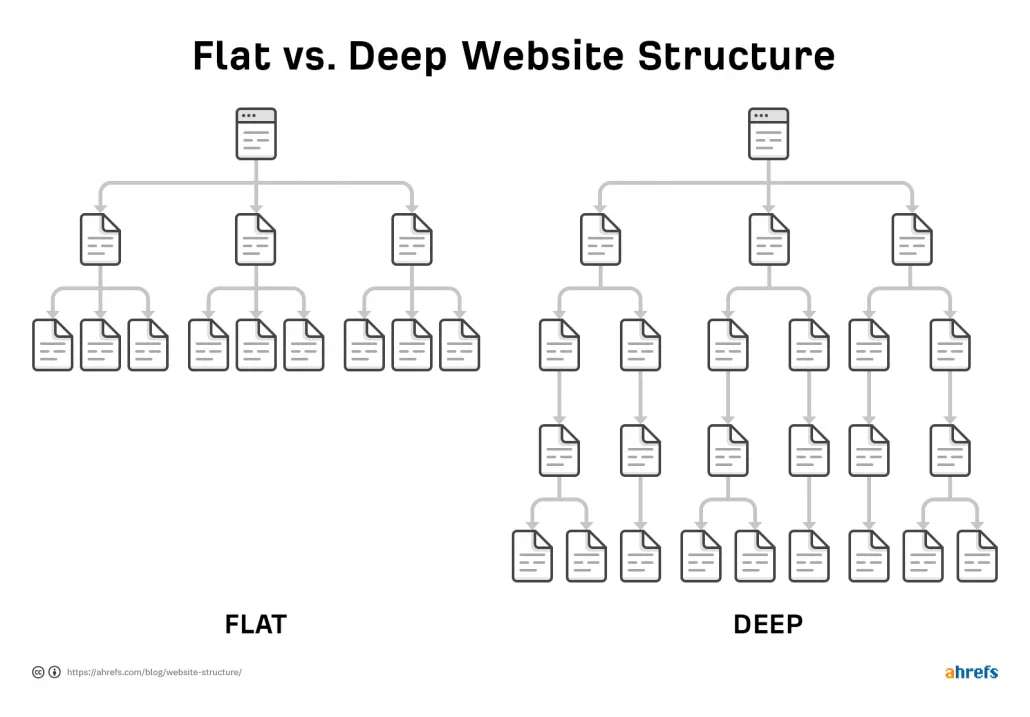

Generally, there are two main types of site structure, and one is more effective than the other for both SEO and users.

- Deep site structure: the majority of pages are multiple clicks from the home page, resulting in them receiving less PageRank

- Flat site structure: the majority of pages on a site are a minimal number of clicks from the home page

The optimal structure for search engines is a flat one, with all pages easily accessible in just a few clicks. Not only does this help search engine bots, but it also creates a good user experience, helps make sure your pages aren’t competing against each other, and encourages crawling of your most important content.
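One way to see the difference between deep and flat structures is to measure click depth, meaning the fewest clicks needed to reach each page from the home page. The sketch below does this with a breadth-first search over two hypothetical internal-link graphs; the page paths and the function name are assumptions for illustration.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search from the home page: {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal-link graphs: page -> pages it links to.
flat_site = {
    "/": ["/shoes/", "/bags/", "/blog/"],
    "/shoes/": ["/shoes/trainers/"],
    "/bags/": ["/bags/totes/"],
}
deep_site = {
    "/": ["/a/"],
    "/a/": ["/a/b/"],
    "/a/b/": ["/a/b/c/"],
    "/a/b/c/": ["/a/b/c/d/"],
}

flat_max = max(click_depths(flat_site, "/").values())
deep_max = max(click_depths(deep_site, "/").values())
print(flat_max, deep_max)
```

Running the same function over a crawl export of your own site would highlight which pages sit furthest from the home page.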

URL Structure

Tip: Use a Consistent URL Structure

A good site structure should be complemented by a consistent URL structure, with the page path featured in the URL. The URL of a page should be clear and reflect “where” the page sits on your site in relation to other pages.

Most successful websites have URLs that follow a consistent, logical structure. Putting your pages under different categories gives Google extra context about each page in that category.
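As a small illustration, the sketch below splits a URL into the category segments it passes through and applies a simple consistency check. The check (lowercase paths, hyphens instead of underscores or spaces) is an assumed house style rather than a rule stated by Google, and the URLs are hypothetical.

```python
from urllib.parse import urlparse

def path_segments(url):
    """Split a URL path into the category segments it passes through."""
    return [segment for segment in urlparse(url).path.split("/") if segment]

def follows_house_style(url):
    """Assumed convention: lowercase path, no underscores or spaces."""
    path = urlparse(url).path
    return (
        path == path.lower()
        and "_" not in path
        and " " not in path
        and "%20" not in path
    )

good = "https://www.yourdomain.com/shoes/trainers/blue-running-shoe"
bad = "https://www.yourdomain.com/Shoes/Blue_Running_Shoe"

print(path_segments(good))
print(follows_house_style(good), follows_house_style(bad))
```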

Robots.txt

Tip: Make use of your robots.txt

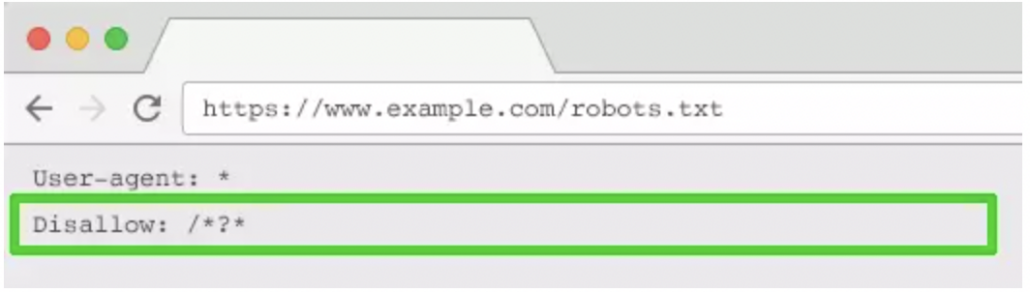

To help search engines know how to find content on your site, a robots.txt sits on your domain to act as a set of instructions. It contains the necessary directives to tell search engine spiders how they should behave and which pages or sections shouldn’t be crawled.

You can identify pages or sections of the site that shouldn’t be crawled and include them in the robots.txt file with the directive:

Disallow: [path]

You can specify each instruction for specific search engines by addressing:

User-agent: [bot name]

Or for all search engines using:

User-agent: *
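To check how a set of rules is likely to be read, you can parse them with Python’s standard `urllib.robotparser` module. This is only a sketch: the paths and the `SomeOtherBot` name are hypothetical, and Python’s parser may not match Google’s own matching rules in every detail.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content with a general group and a Googlebot group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/

User-agent: Googlebot
Disallow: /staging/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.yourdomain.com"

# A bot with no group of its own falls back to the "*" rules.
other_checkout = parser.can_fetch("SomeOtherBot", base + "/checkout/basket")
other_shoes = parser.can_fetch("SomeOtherBot", base + "/shoes/")

# Googlebot has its own group, so only that group's rules apply to it.
google_staging = parser.can_fetch("Googlebot", base + "/staging/new-page")
google_checkout = parser.can_fetch("Googlebot", base + "/checkout/basket")

print(other_checkout, other_shoes, google_staging, google_checkout)
```

Note that in this sketch the Googlebot group does not inherit the `*` rules, so any path you want blocked for every bot needs to be repeated in each specific group.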

XML Sitemap

Tip: Use an XML Sitemap

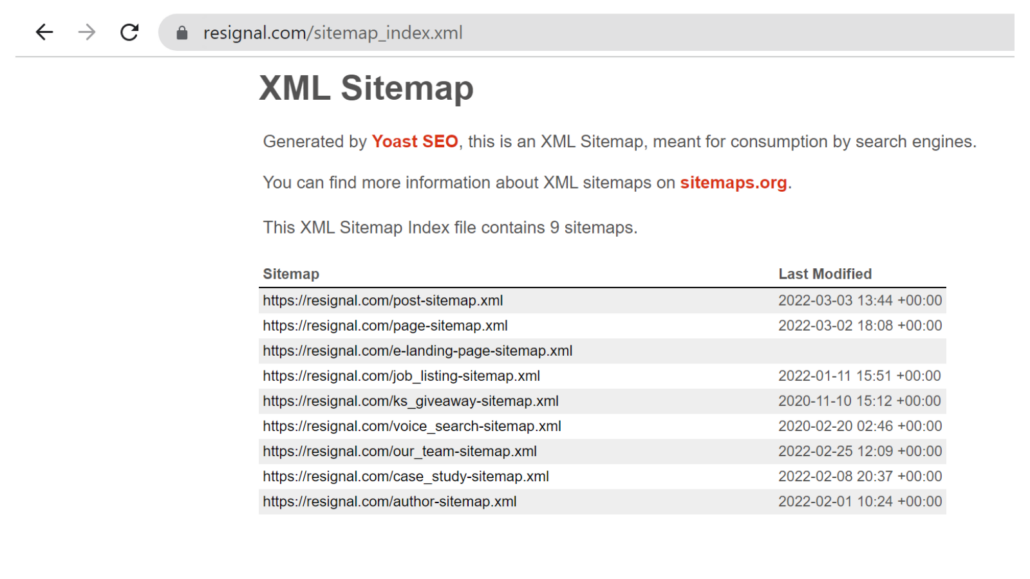

An XML sitemap is a list of all the live pages of your site. It can be split into multiple lists that mirror your category structure, e.g. one sitemap for products, another for blogs. Or it can contain all URLs on your website in one.

The aim of an XML sitemap is to help search engines discover all of your pages, so your XML sitemap should be available on your root domain at a standard URL path, for example:

www.yourdomain.com/sitemap.xml

To help bots find your XML sitemap, you should also signpost its location in the robots.txt file. It’s important that search engines find it, so you can also submit your sitemap in Google Search Console. A Google representative recently stated that XML sitemaps are the “second most important source” for finding URLs.
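If your CMS does not generate a sitemap for you, a minimal one can be produced with Python’s standard library. The sketch below is an illustration under assumptions: the page URLs are hypothetical, and only the required `loc` element of the sitemaps.org format is written.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a minimal XML sitemap listing the given URLs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical live pages on the site.
live_pages = [
    "https://www.yourdomain.com/",
    "https://www.yourdomain.com/shoes/",
    "https://www.yourdomain.com/shoes/trainers/",
]

sitemap_xml = build_sitemap(live_pages)

# Line to add to robots.txt so that bots can find the sitemap.
robots_txt_line = "Sitemap: https://www.yourdomain.com/sitemap.xml"

print(sitemap_xml)
print(robots_txt_line)
```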

301/302 redirects

Tip: Use permanent redirects for URL changes

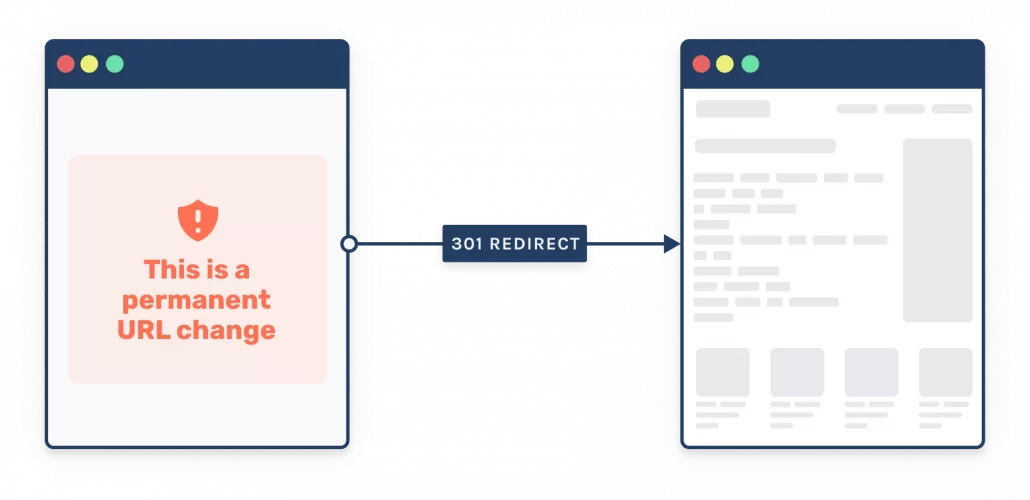

A redirect is used when the URL of a page changes, or the page has been removed. It is a way of forwarding the visitor to another relevant URL while preventing 404 error pages, which make for a bad user experience and can hinder search engine crawling.

There are two main types of redirects:

301 redirects

A 301 response code indicates a permanent redirect, which means that the old page should be removed from the index and no longer ranked.

A 301 redirect will therefore pass any value from the old URL to the new one, which includes link juice from backlinks.

However, if the old page is reinstated or reused, you can still remove a 301 redirect in your CMS.

Image credit: Sam Underwood – Visualising SEO

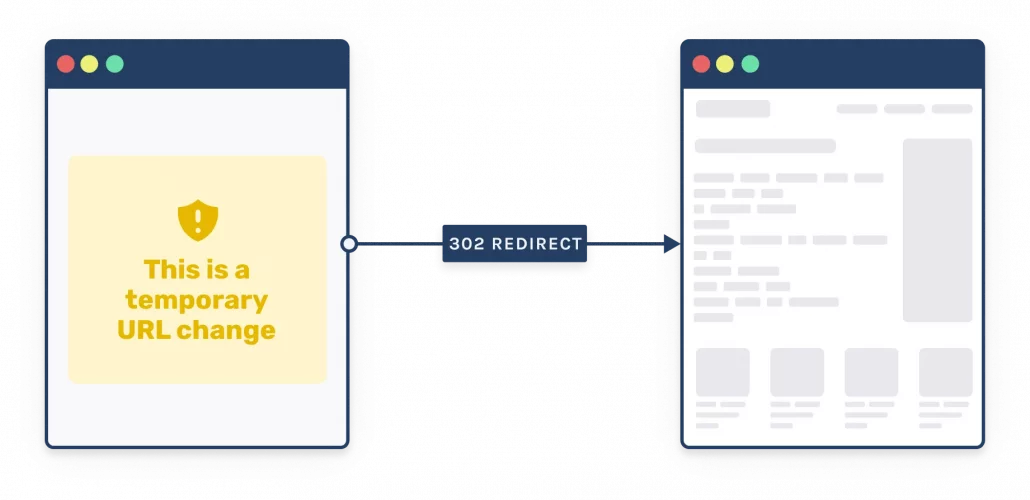

302 redirects

A 302 response code indicates that the redirect is temporary, which means that the old page will return and so should be kept in the index ready to rank when it returns.

In this case, all of the SEO value is kept in the old URL.

Image credit: Sam Underwood – Visualising SEO
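The choice between the two codes can be sketched as a simple lookup. The redirect table, the paths, and the `resolve` function below are hypothetical; in practice, redirects are usually configured in your CMS or web server rather than written by hand.

```python
from http import HTTPStatus

# Hypothetical redirect rules: old path -> (new path, is the move permanent?)
REDIRECTS = {
    "/old-trainers": ("/shoes/trainers", True),    # URL changed for good
    "/summer-sale": ("/sale-coming-soon", False),  # page will return later
}

def resolve(path):
    """Return (status code, Location header or None) for a requested path."""
    if path not in REDIRECTS:
        # Simplification: every other path is treated as a normal page.
        return HTTPStatus.OK.value, None
    target, permanent = REDIRECTS[path]
    status = HTTPStatus.MOVED_PERMANENTLY if permanent else HTTPStatus.FOUND
    return status.value, target

print(resolve("/old-trainers"))
print(resolve("/summer-sale"))
print(resolve("/shoes/"))
```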

Noindex / Nofollow

Tip: Use noindex tags to reduce index bloat / keep unnecessary pages from Google

As well as your robots.txt file, you can give Google instructions on how to treat pages in the HTML code of each URL. Noindex and nofollow tags are examples of such directives.

The noindex tag instructs Google not to index a page or show it in search results, while a nofollow tag tells Google to ignore a link as it should not pass PageRank signals. Google treats noindex as a directive, provided it can crawl the page to see it, whereas nofollow is treated as a hint that Google can choose to ignore.
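To audit which of these tags a page actually carries, you can scan its HTML. The sketch below uses Python’s standard `html.parser` module; the sample page and the class name are assumptions for illustration.

```python
from html.parser import HTMLParser

class RobotsHintParser(HTMLParser):
    """Collect meta robots values and links marked rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.meta_robots = set()
        self.nofollow_links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for token in content.split(","):
                if token.strip():
                    self.meta_robots.add(token.strip().lower())
        elif tag == "a" and "nofollow" in (attrs.get("rel") or "").lower().split():
            self.nofollow_links.append(attrs.get("href"))

# Hypothetical page: kept out of the index, with one nofollow link.
PAGE = """
<html>
  <head><meta name="robots" content="noindex, follow"></head>
  <body>
    <a href="/terms" rel="nofollow">Terms</a>
    <a href="/shoes/">Shoes</a>
  </body>
</html>
"""

hints = RobotsHintParser()
hints.feed(PAGE)
print(sorted(hints.meta_robots))
print(hints.nofollow_links)
```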

Canonical tags

Tip: Use Canonical Tags

Canonical tags are another page-level instruction that sits in the HTML of each URL. They are a hint to search engines to indicate which page is the primary version of the content when it is duplicated across multiple URLs.

Although duplicate content is never advisable, sometimes it is unavoidable and so canonical tags can help to prevent any issues and/or penalties.

Just make sure that you use canonical tags to indicate which URL is the primary one.
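In practice, every duplicate URL carries the same canonical tag pointing at the primary URL, and the primary URL points at itself. The sketch below builds that tag for a hypothetical set of duplicates; the URLs and the function name are assumptions.

```python
from html import escape

def canonical_tag(primary_url):
    """Build the canonical link element for a page's <head>."""
    return f'<link rel="canonical" href="{escape(primary_url, quote=True)}">'

# Hypothetical duplicates of one category page, created by URL parameters.
primary = "https://www.yourdomain.com/shoes/trainers"
duplicates = [
    "https://www.yourdomain.com/shoes/trainers?sort=price",
    "https://www.yourdomain.com/shoes/trainers?utm_source=newsletter",
]

# Each version, including the primary itself, gets the same tag.
tags = {url: canonical_tag(primary) for url in duplicates + [primary]}
print(canonical_tag(primary))
```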

HREFLang Tags

Tip: Use HREFlangs to distinguish between region/language

If you have multiple versions of a page for different languages or regions, you need to tell Google about these different variations using HREFLang tags, which are another type of page-level annotation.

These work similarly to canonical tags, as they are a way to prevent duplicate content issues. However, HREFLang tags work by indicating which version of each page is the most appropriate depending on the user’s language or region.

HREFLang tags can help to make sure that users in one country are shown their country’s version of the site, rather than another country’s version.
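As an illustration, the sketch below generates the set of HREFLang link elements for one page that exists in three versions, plus an `x-default` fallback. The language codes, URLs, and function name are hypothetical. Each version of the page would normally carry the full set, including a tag that refers to itself.

```python
from html import escape

def hreflang_tags(versions, default_url=None):
    """Build one alternate link element per language or region version."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in sorted(versions.items())
    ]
    if default_url:
        # x-default marks the fallback for users who match no listed version.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}">'
        )
    return tags

# Hypothetical versions of the same page.
VERSIONS = {
    "en-gb": "https://www.yourdomain.com/uk/",
    "en-us": "https://www.yourdomain.com/us/",
    "fr": "https://www.yourdomain.com/fr/",
}

tags = hreflang_tags(VERSIONS, default_url="https://www.yourdomain.com/")
print("\n".join(tags))
```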

Structured Data

Tip: Use structured data to help win rich results for better CTR

Another page-level markup you can use to help search engines understand and rank your content is structured data, which is sometimes referred to as schema markup.

The idea of structured data is to pinpoint types of information in your content, like phone numbers, recipe steps, and other key information. Using structured data to mark up your content can give some of your pages more opportunity to appear as rich results, as Google can quickly and easily understand your page content.

Rich results stand out in the SERPs, so they can dramatically improve your organic click-through rate.
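Structured data is commonly added to a page as a JSON-LD script block that uses the schema.org vocabulary. The sketch below builds one for a recipe; the recipe content and the function name are made up for illustration, and a real page would need whichever properties the relevant schema.org type calls for.

```python
import json

def json_ld_script(data):
    """Wrap a schema.org description in a JSON-LD script element."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so that text in the data cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

# Hypothetical recipe marked up with schema.org types.
recipe = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Simple Pancakes",
    "recipeIngredient": ["200g flour", "2 eggs", "300ml milk"],
    "recipeInstructions": [
        {"@type": "HowToStep", "text": "Whisk the ingredients into a batter."},
        {"@type": "HowToStep", "text": "Fry a ladleful at a time until golden."},
    ],
}

snippet = json_ld_script(recipe)
print(snippet)
```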

Pagespeed Optimisation

Tip: Improve Page speed for better UX and organic rankings

How quickly your site loads and becomes interactive is now a ranking factor for Google. So it not only affects how quickly Googlebot can crawl your website but also how well your pages rank. Improving your page speed can therefore directly impact your site’s rankings.

That’s because faster pages are more efficient, helping to provide a better UX and convert better. Pages with long load times tend to have higher bounce rates and lower average time on page, so Google prefers to give its users the best experience by sending them to websites that they will find easy to use.

You can evaluate your page speed with Google’s PageSpeed Insights and find opportunities and details for improvement.

The elements most often considered when improving page speed are:

- CDNs for content/images

- Caching

- Lazy loading

- Minifying CSS

Elements that are often overlooked when improving page speed are:

Reduce Web Page Size

A large-scale page speed study found that a page’s total size correlated with load times more than any other factor.

Test load times with/without CDN

The same study showed that CDNs were associated with worse load times, because many CDNs aren’t set up correctly. We recommend using webpagetest.org to test your site’s speed with the CDN on and off.

Eliminate 3rd Party Scripts

Each 3rd party script that a page has adds an average of 34ms to its load time. Some of these scripts (like Google Analytics) you probably need. But it never hurts to look over your site’s scripts to see if there are any that you can get rid of.
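A quick way to start that review is to list the external scripts a page loads. The sketch below counts scripts served from other hosts and multiplies by the 34ms average quoted above. The sample HTML, host names, and function names are hypothetical, and the resulting figure is a rough estimate rather than a measurement of your site.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

AVG_MS_PER_THIRD_PARTY_SCRIPT = 34  # average quoted in the article

class ScriptCollector(HTMLParser):
    """Collect the src attribute of every external script tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)

def third_party_scripts(html, site_host):
    """Return script URLs served from a host other than the site's own."""
    collector = ScriptCollector()
    collector.feed(html)
    return [
        src for src in collector.sources
        if urlparse(src).netloc not in ("", site_host)
    ]

# Hypothetical page with two first-party and two third-party scripts.
PAGE = """
<script src="/js/app.js"></script>
<script src="https://www.yourdomain.com/js/menu.js"></script>
<script src="https://www.googletagmanager.com/gtag/js?id=HYPOTHETICAL"></script>
<script src="https://widgets.example.com/chat.js"></script>
"""

third_party = third_party_scripts(PAGE, "www.yourdomain.com")
estimated_ms = len(third_party) * AVG_MS_PER_THIRD_PARTY_SCRIPT
print(third_party)
print(estimated_ms)
```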

Key resources to test/validate PageSpeed

Google’s PageSpeed Insights is the most detailed tool, but it doesn’t always answer every question, so you can alternatively use:

(GTmetrix Grade + actionable insights on Core Web Vitals + waterfall / video / history and page details)

(Actionable insights on all Core Web Vitals + waterfall and performance from the CrUX report)

A fast-loading site alone will not move you to the top of Google’s first page, but improving loading speed can make a significant difference to your organic traffic.

Summary

Working on improving the technical health of your website by making optimizations in each of these areas is an important step in helping to improve how well your pages rank in Google.

However, working on optimising your technical SEO in isolation is not likely to make significant improvements in your organic ranking. To really make an impact that lasts, you should incorporate multiple aspects of SEO into your strategy, including technical, on-page, and off-page. Read our Introduction To SEO to learn more about building all three aspects into your strategy.