MUM’s the Word: Everything You Wanted to Know About Google’s Algorithm Update

In May, Google announced MUM, which stands for Multitask Unified Model, as the latest advancement in its search engine’s capabilities, and with it a new chapter of search opened up.

Before discussing MUM any further, though, we need to go back to 2017 and look at Google’s machine learning journey, so that we can understand the latest search algorithm update in its full context.

Transformer, Google’s Machine Learning Model

MUM, like the other language AI models in Google’s AI ecosystem, is built on a neural network architecture called Transformer, which Google invented and later made open source.

One of the most prominent capabilities of the Transformer architecture is that it produces models trained to read the many words that make up a sentence or paragraph and understand how those words relate to one another. In other words, it can recognise the semantic significance of words in relation to each other and potentially predict which words will come next. Google introduced the architecture in a 2017 research paper entitled “Attention Is All You Need”, which you can read to learn about the Transformer in more detail.
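The core mechanism that “Attention Is All You Need” describes is scaled dot-product attention: each word’s vector is compared with every word’s vector, the scores are turned into weights with a softmax, and each word gets a new representation that is a weighted mix of the others. Below is a minimal pure-Python sketch under simplifying assumptions: a single attention head, no learned projection matrices, and hypothetical two-dimensional word vectors. It illustrates the idea only and is not how MUM or BERT are implemented in production.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scaled_dot_product_attention(queries, keys, values):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this word "attends" to every word in the sequence.
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # New representation: weighted average of the value vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors for three words from a query.
# In self-attention the same vectors serve as queries, keys and values.
words = ["math", "books", "adults"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
outputs, weights = scaled_dot_product_attention(embeddings, embeddings, embeddings)

for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word draws on every word in the sequence; in a real Transformer the vectors are learned, and many such attention heads run in parallel across many layers.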

Today, neural networks are at the forefront of language understanding tasks such as language modelling, machine translation and question answering.

The Transformer architecture underpins language models such as LaMDA, which stands for Language Model for Dialogue Applications and is designed for conversational applications such as chatbots. It also underpins BERT, the predecessor to MUM. BERT was introduced in October 2018 in a research paper by Google AI Language entitled “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding”, and Google began applying it to Search in October 2019.

Before MUM There Was BERT

BERT, which stands for Bidirectional Encoder Representations from Transformers (no, it isn’t named after the cute character from Sesame Street; sorry to disappoint), is a neural network-based technique for Natural Language Processing (NLP) pre-training. With the advent of BERT, the Transformer model and NLP became a mainstay of Google Search.

BERT helped Google make many advances in search. One of the key ones was a better understanding of the intent behind a search query and the context of the words in it, which allowed Google to return more relevant results.

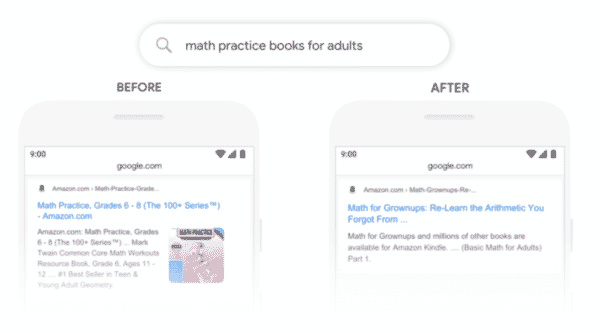

Take the query “math practice books for adults”. Before BERT was applied, Google would return results for math books aimed at grades 6 to 8. With BERT, Google could understand the context and nuances implied by the sequence of words in the query and return much more accurate results: a math book for grown-ups, which matches the user’s query more closely, as shown in the image.

Image Credit: Search Engine Land

This helped Google to better understand the context of words, as well as the intention behind those words, when a user made a search.

This is where MUM comes into the picture. MUM picks up where BERT left off and goes well beyond it. What separates MUM from BERT, first and foremost, is that according to Google, MUM is 1,000 times more powerful.

Now that we know where MUM comes from, in terms of the model it was built on and how it builds on BERT, we can look at what MUM actually is, how it will change the search landscape, what impact it will have on the nature of search and, of course, what it means for SEO.

As mentioned, MUM is 1,000 times more powerful than BERT. But what does this actually mean?

MUM takes BERT’s advances in understanding words, context and user intent and goes further: it is designed to understand complex, almost conversational search queries across languages and across different modalities, such as text and images.

As Pandu Nayak, a Google Fellow and Vice President of Search, writes, one of the main goals of MUM is to reduce the number of searches a user needs to make to get the answer they need. Rather than searching eight times, which is the average number of searches a user makes to complete a complex task, they would ideally need to search only once to retrieve all the information they require.

Prabhakar Raghavan illustrated this further when he demonstrated MUM and its capabilities at Google I/O this year. He gave an example of something a user might want to find out on Google: “You’ve hiked Mt. Adams. Now you want to hike Mt. Fuji next fall, and you want to know what to do differently to prepare.” The most striking thing about this scenario is that it doesn’t sound like a query you could search in Google. That’s because it isn’t, at least not yet. It sounds more like a question you would put to a hiking expert when asking for advice, and with MUM, that is exactly the kind of exchange Google is trying to replicate.

Today, to get a complete, satisfying answer, you would need to make several individual searches: the elevation of the mountain, the average temperature in the fall, the difficulty of the hiking trails, and more.

If you were actually talking to an expert hiker, however, you could simply ask, “What should I do differently to prepare?” They would draw on in-depth knowledge of the subject and take the subtleties of your question into account, giving you not only the answer to what you asked but also the answers to questions they think you will need, before you have even asked them.

This is what Google means by reducing the number of searches to a single query: one search that returns a robust answer, taking into account all the nuances of your question and any follow-up questions you would otherwise have to ask individually.

So how will MUM actually carry this out? Let’s look more closely at the two main facets of the MUM update:

1) It is multilingual: building on the same kind of NLP technology as BERT, it can search for and retrieve information across different languages and from a variety of sources, which includes anticipating the follow-up questions a user might ask and answering them pre-emptively.

2) It is multimodal: it can understand a query based on different types of media.

1. MUM is Multilingual

As discussed, Natural Language Processing will continue to play a significant role moving forward, so much so that MUM has been trained across 75 different languages and can generate language as well as understand it. This could drastically change the way Google returns information to users, because MUM is meant to understand language in all its many forms. But Google doesn’t just want to understand different languages; it also wants to better understand what users are asking, so that it can give a more detailed, richer answer in return.

Returning to the Mt. Fuji example, MUM can also source information from sites written in a language other than the one you searched in, when those sites offer more detailed and relevant results, and translate it for you.

For example, if the most useful information for your query about hiking Mt. Fuji was in Japanese, you probably wouldn’t find it unless you searched in Japanese to begin with. With MUM’s ability to source knowledge across languages and markets, you could potentially see results such as where to enjoy the best views of the mountain, or local souvenir shops worth visiting, that would previously only have been available had you searched in Japanese. This breaks down the barriers that language places on search.

In other words, Google could become fully language agnostic: the language the user searches in and the original language of the site Google retrieves its answer from would no longer matter.
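Google has not published exactly how MUM retrieves across languages. One common approach in cross-lingual retrieval, assumed here purely for illustration, is to map text in every language into a shared vector space and rank pages by similarity to the query. The sketch below uses hand-picked, hypothetical three-dimensional vectors and invented page titles; a real model would learn vectors with hundreds of dimensions.

```python
import math

# Hypothetical pages with hand-picked 3-dimensional vectors. The numbers and
# titles are invented for illustration; a real multilingual model learns them.
DOCUMENTS = [
    {"lang": "en", "title": "Mt. Fuji hiking gear checklist", "vec": [0.9, 0.1, 0.2]},
    {"lang": "ja", "title": "Best viewpoints of Mt. Fuji (Japanese page)", "vec": [0.7, 0.6, 0.1]},
    {"lang": "en", "title": "Best pizza in Chicago", "vec": [0.0, 0.1, 0.95]},
]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, documents):
    """Sort pages by similarity to the query, most similar first.

    The "lang" field is never used for scoring, so a page can rank for a
    query written in a different language.
    """
    return sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, doc["vec"]),
        reverse=True,
    )


# Hypothetical vector for an English query about the best views of Mt. Fuji.
query_vec = [0.8, 0.5, 0.1]
ranked = rank_documents(query_vec, DOCUMENTS)
for doc in ranked:
    print(doc["lang"], doc["title"])
```

With these toy numbers the Japanese page ranks first for the English query simply because its vector is closest. Language plays no part in the score, but relevance, measured here as similarity, still decides the order.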

However, we should remember that relevance will remain the key factor in deciding what information is shown, above everything else.

And as Google becomes better at sourcing information across multiple languages, so will its understanding of what is relevant information and what is not.

Baidu’s ERNIE 3.0

On a side note, it is worth mentioning that other search engines have developed their own natural language processing capabilities as well. For example, Baidu, in China, has a similar technology which it has so affectionately called ERNIE 3.0 (and no, this is not a reference to the other character from Sesame Street either; sorry to disappoint again).

Google, then, is not the only player in the market with NLP as a distinguishing card to play, and something of a race is under way between the world’s major search engines.

Now that we have discussed how NLP and MUM’s language comprehension could take search beyond its current language-based limitations and potentially towards a fully language-agnostic future, let’s move on to the second facet that will allow MUM to handle complex searches: its ability to work with multiple media formats, such as images and video.

2. MUM and Multimodality

The search landscape is evolving, and information from other media, such as images and videos, will soon be integrated too. This is where MUM comes in: it is designed to understand information across text and images simultaneously, with other formats such as video to follow. Google gives the example of a user who could eventually take a picture of their hiking boots and ask, “Can I use these to hike Mt. Fuji?” MUM would connect the photo of your boots with your question, tell you whether they are suitable, and point you to a page with other useful hiking gear.

Image credit: blog.google

Together, these advances should allow Google to handle many of the complex search queries it has anticipated. In fact, MUM could enable Google to keep users on the search results page for considerably longer than it does today, because it could provide a thorough, in-depth, almost conversational answer to a user’s query, potentially making it unnecessary to click through to a web page.

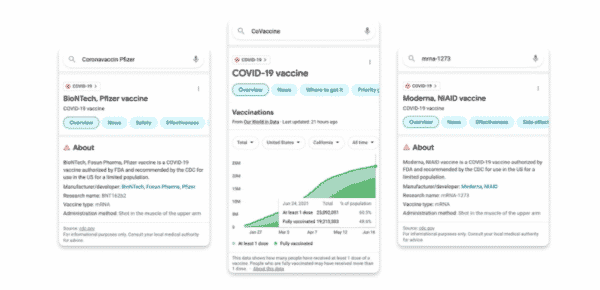

Google has shared a practical example of MUM in action: identifying the many different names used for COVID-19 vaccines, from “AstraZeneca” to “CoronaVac”. Google wanted to make sure that relevant, useful information would be returned whenever someone searched for any variant of a vaccine name, and MUM made this much easier.

Image credit: blog.google

Using MUM, Google identified over 800 variations of vaccine names in more than 50 languages, all in a matter of seconds. It then applied this knowledge to Google Search, so that when a user searches for a vaccine using whatever name they choose, Google can return authoritative, reliable information regardless of the language or market they search in.

With all these new capabilities, we need to ask ourselves: what does MUM mean for SEO? Let’s first address the elephant in the room. With every algorithm update, talk inevitably turns to whether this is the death of SEO.

MUM is no different. Some have speculated that this time it really could mean the end of SEO: as Google moves away from users relying on keywords and towards more conversational dialogue, acting more like a personal assistant, influencing rankings through keywords could become obsolete. However, this is only speculation. In reality, Google will still need to draw information from relevant content, and queries will inevitably still contain keywords. Relevant content and keyword targeting will therefore remain important.

So, as I’m sure you already knew, this is not the end of SEO. Rather, SEO has simply evolved into a different kind of SEO, and we as SEOs need to adapt with it, starting with our SEO strategy for the MUM update.

We can do so by focusing on one main, but crucial element: content.

What Can We Do Now to Adapt to the MUM Update?

Content, Content, Content

Emphasise EAT

First, in a language-agnostic future, content will continue to play a significant role in determining how web pages rank.

But not just any content: content with expertise, authoritativeness and trustworthiness (EAT) at its core. Google aims to be the directory to the internet and, as discussed, now wants to surface comprehensive, targeted knowledge that spares users from searching separately for each part of a larger topic. It will therefore prioritise content that is well researched, comprehensive and credible; in other words, content that answers both the questions a user has asked and the ones they might go on to ask.

This is the kind of content that has always ranked well in Google’s search results, and in the era of MUM that won’t change. If anything, EAT content will play an even greater role.

Include Sources to Add Authority to Your Content

Second, to strengthen your content’s authority, base it on thorough research and, if you don’t already, link to the sources of that research. Citing your research helps Google recognise the authority and credibility of your content, much as citations do for a research paper in academia. The classic computer science expression “garbage in, garbage out” illustrates the point well: Google works hard to show relevant, trustworthy content over poor content, so referencing the thorough research behind your content is another way to demonstrate that it is trustworthy.

More Localised Content

Third, beyond being expert, content needs to capture the local nuances of your market. Google will no longer seek out authoritative content only in your market; it will also look for content in other languages and markets. Because Google will be able to translate sites in other languages directly to match a search query, the competition to rank for that query will increase.

What will set your content apart are the cultural references, pre-existing knowledge and intricacies that Google cannot replicate through a direct translation of a website from another market. Translation will not replace localisation, and localised content will remain the best way to serve other markets.

Invest in Local Copywriters

Finally, since localised content will matter more than ever with MUM, investing in local copywriters is another way to support your efforts to create rich, local content. A local writer’s cultural understanding is something Google cannot yet replicate, so local writers should be a central part of your content strategy.

Now, let’s look at what all this means in practice for the different kinds of websites that populate the internet.

First, let’s look at multilingual websites.

MUM and Multilingual Websites

For websites with versions in different languages targeting different markets, localised content needs to take priority over direct translation, as it will be necessary to rank successfully. In the MUM era of search, Google will be able to present users with information from across different markets and languages, but it will not understand the cultural nuances of those markets, and that gives localised sites the advantage.

In contrast, campaign strategies that prioritise direct translation over localisation will inevitably suffer. Google will be able to translate content itself, possibly more accurately than the translation on your site, so the advantage of having your content translated into another market’s language will disappear. As a result, it is websites with localised content that will be able to rank well for searches in other markets.

Moving on, let’s consider what MUM will mean for single language websites.

MUM and Single Language Websites

Websites with authoritative content and robust backlinks have a stronger chance of being a source of information in another market, as part of Google’s language agnostic future.

However, as alluded to earlier, this also means more competition, because websites will be competing against sites from other markets as well. Your expertise in a local market may not be enough to help you rank in geo-relevant searches, and you could lose visibility as a result.

Overall, localised content with an emphasis on EAT is the key to any SEO strategy under MUM. It will help your site keep ranking in your own market and, as Google moves further towards a language-agnostic future, in other markets as well. Most importantly, it will best answer a user’s search query.

Final Thoughts

What Can We Expect?

MUM will change search in many profound ways, moving beyond intent-based phrases and keywords to break down linguistic barriers and understand complex requests and questions in ways we have not yet seen. As a result, we can expect potentially drastic impacts on SEO as well. Some of the predicted changes include:

1) We could see more zero-click searches. Because Google will be able to answer a user’s question directly on the search results page, users may feel less and less need to click through to a web page.

2) We can also expect much more competition to rank for a given search query, as pages from different markets, in various languages, could be returned if they best answer the user’s question.

3) Finally, we could see lower search volume for keywords in general. If users need fewer searches to get the answers they seek, overall search demand could drop.